
Energy-efficient hardware accelerators were deployed to significantly improve the performance of Cloud-computing applications and reduce energy consumption in data centers. In the years since the project started, the VINEYARD vision has been realized through the deployment of hardware accelerators in the Cloud: in 2017, hyperscalers such as Amazon, Huawei, Alibaba and Baidu offered FPGA resources to their Cloud users.

VINEYARD developed novel energy-efficient platforms by integrating two types of hardware accelerators: a new-generation dataflow-based accelerator and a novel architecture for FPGA-based (control-flow) accelerators. The dataflow engines (DFEs) are suitable for high-performance computing (HPC) applications that can be effectively represented as dataflow graphs, while the FPGA-based accelerators are used for applications that need tight communication between the processor and the hardware accelerator(s).

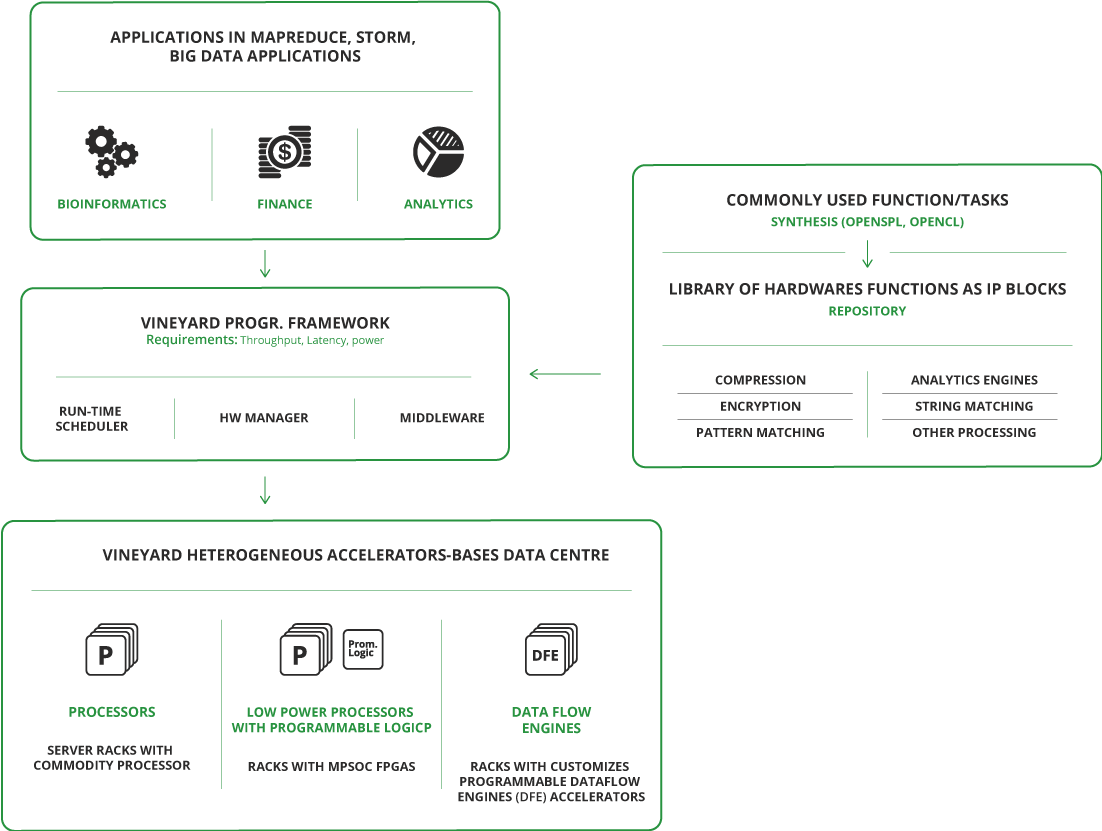

High-level diagram of the VINEYARD framework

On the technology front, the project partner Maxeler developed the next generation of DFEs during the VINEYARD project, featuring a novel, high-performance architecture. The new MAX5 achieves 3x higher compute performance and 2x lower energy consumption than previous-generation DFEs. The MAX5 is also fully compatible with deployment in the Cloud (e.g. compatible with Amazon AWS F1 instances).

At the same time, the project partner Bull developed many prototypes of discrete/integrated FPGA-accelerated servers based on Intel’s Skylake/CascadeLake CPUs and Arria10/Stratix10 FPGAs. The transformation of one of these prototypes into a commercial product is planned for 2019. Such a product will offer a low-latency communication interface between the CPUs and the accelerators and is intended to evolve towards an extension of computation nodes within HPC systems.

VINEYARD also developed a high-level programming framework that allows the seamless integration of heterogeneous hardware accelerators (e.g. CPUs, GPUs, FPGAs, DFEs) in modern data centers. The VINEYARD framework allows end-users to seamlessly utilize these accelerators in heterogeneous computing systems by using typical cluster frameworks (i.e. Spark). The chief breakthrough of the VINEYARD framework lies in its ability to hide the programming complexity of the heterogeneous computing system from users and allow them to focus on application coding.

Programming heterogeneous accelerators is a challenge, since accelerator programming languages are developed specifically by the hardware vendors to take advantage of the underlying hardware. The core component of the VINEYARD programming framework is a class hierarchy that abstracts away the existence of multiple implementations of a kernel and allows programmers to simply think about “accelerated kernels” without having to think about differences in calling conventions or details of the respective implementations.
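The idea of a class hierarchy that hides multiple kernel implementations can be illustrated with a minimal Python sketch. This is not the VINEYARD API: the names `AcceleratedKernel`, `CpuVectorSum`, `FpgaVectorSum` and `vector_sum` are hypothetical, and the FPGA variant is a stand-in that computes on the host rather than offloading to a device.

```python
from abc import ABC, abstractmethod


class AcceleratedKernel(ABC):
    """Hypothetical base class: one logical kernel, many implementations."""

    name = "kernel"

    @abstractmethod
    def is_available(self) -> bool:
        """Report whether this implementation can run on the current node."""

    @abstractmethod
    def run(self, data):
        """Execute the kernel and return its result."""


class CpuVectorSum(AcceleratedKernel):
    name = "cpu"

    def is_available(self) -> bool:
        return True  # the CPU fallback is always present

    def run(self, data):
        return sum(data)


class FpgaVectorSum(AcceleratedKernel):
    name = "fpga"

    def __init__(self, device_present: bool = False):
        # Assumption: device discovery is reduced to a boolean flag here.
        self.device_present = device_present

    def is_available(self) -> bool:
        return self.device_present

    def run(self, data):
        # Stand-in for a real offload; a real variant would call vendor code.
        return sum(data)


def vector_sum(data, implementations):
    """Run the first available implementation; the caller never picks one."""
    for impl in implementations:
        if impl.is_available():
            return impl.name, impl.run(data)
    raise RuntimeError("no implementation of the kernel is available")
```

The caller only ever invokes `vector_sum`; which implementation serves the call depends on what the node offers, so differences in calling conventions stay inside the subclasses.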

Therefore, a VINEYARD programming model has been developed that alleviates this problem by abstracting away the underlying hardware details from the application developers. This leads to a wider adoption of accelerators, resulting in higher data-center efficiency and reduced Total Cost of Ownership (TCO). Besides programming heterogeneous accelerators, scheduling applications on a heterogeneous data center is an equally crucial challenge.

Thus, a VINEYARD runtime system has been developed to schedule applications transparently and efficiently, aiming at higher quality of service (QoS). The VINEYARD programming model, targeting the VINEYARD runtime system, allows the efficient and effortless utilization of hardware accelerators from typical cluster frameworks; here, specifically Spark.


The VINEYARD runtime system is powered by VineTalk, the VINEYARD middleware that augments the functionality of the data-center resource manager by enabling more informed allocation of tasks to heterogeneous accelerators. The VineTalk front-end abstracts accelerators as Virtual Accelerator Queues (VAQs) and simplifies the use of accelerators upstream. Towards the efficient and seamless utilization of hardware accelerators, the VineTalk back-end allows the sharing of available hardware resources and offers advanced QoS features to application developers.
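The split between a queue-based front-end and a sharing back-end can be pictured with a small Python sketch. It does not reproduce the VineTalk interface: `VirtualAcceleratorQueue`, `SharedAccelerator` and the round-robin policy are assumptions chosen only to show how several applications might share one device through per-application queues.

```python
from collections import deque


class VirtualAcceleratorQueue:
    """Hypothetical front-end handle: an application enqueues tasks here."""

    def __init__(self, owner):
        self.owner = owner
        self.tasks = deque()

    def submit(self, fn, *args):
        # The application sees only a queue, never the physical device.
        self.tasks.append((fn, args))


class SharedAccelerator:
    """Hypothetical back-end: one physical device serving several queues."""

    def __init__(self, queues):
        self.queues = list(queues)

    def drain(self):
        """Serve the queues round-robin, one task per turn, until all are empty."""
        results = []
        while any(q.tasks for q in self.queues):
            for q in self.queues:
                if q.tasks:
                    fn, args = q.tasks.popleft()
                    results.append((q.owner, fn(*args)))
        return results
```

Round-robin is only one possible sharing policy; a QoS-aware back-end would replace the inner loop with a policy that weighs deadlines or task classes.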

VineTalk encompasses:

1) a scheduler, called Flexy, which distinguishes between user-facing and batch tasks,

2) a new system, called Syntix, that profiles applications running on accelerators (here: CPUs and GPUs) and decides the optimal allocation of GPU threads, and

3) a new system, called QuMan, that works on top of cluster managers, such as Mesos, and optimizes resource allocation and the assignment of tasks based on their performance profiles in order to maximize cluster utilization within a controlled performance degradation.
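As an illustration of what distinguishing user-facing from batch tasks can mean for a scheduler, the following Python sketch always serves user-facing tasks first and keeps submission order within each class. The policy and the names `TwoClassScheduler`, `USER_FACING` and `BATCH` are assumptions for illustration; the sketch does not describe how Flexy actually decides.

```python
import heapq
import itertools

# Lower value means higher priority (assumed policy, not taken from Flexy).
USER_FACING, BATCH = 0, 1


class TwoClassScheduler:
    """Hypothetical scheduler that favors user-facing over batch tasks."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order per class

    def submit(self, name, task_class):
        heapq.heappush(self._heap, (task_class, next(self._seq), name))

    def next_task(self):
        """Return the name of the next task to run, or None if idle."""
        if not self._heap:
            return None
        _, _, name = heapq.heappop(self._heap)
        return name
```

A strict-priority policy like this can starve batch work under sustained user-facing load, which is why production schedulers usually add aging or reserved capacity.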

VINEYARD also fostered the establishment of an ecosystem that empowers open innovation based on hardware accelerators as data-center plugins, thereby enabling innovative enterprises (large industries, SMEs, and creative start-ups) to develop novel solutions using the VINEYARD framework. A central repository called AccelStore (www.accel-store.com) has been created to host hardware-accelerator IP for use in heterogeneous computing systems. The community can upload their individual hardware-accelerator IP to AccelStore, which can, in the future, be used to broker the licensed use of such IP by third parties.

Last but not least, and as the first submissions to the AccelStore, the project has developed a comprehensive VINEYARD accelerator suite, a collection of hardware accelerators in the form of IP cores that is used to evaluate the VINEYARD framework on real use-case applications.

VINEYARD demonstrated the advantages of its approach on three real use-cases:

A) Neurocomputing applications;

B) Financial applications; and

C) Data-analytics applications.

A) In the domain of neurocomputing, performance evaluation involved accelerating the Inferior-Olive simulator and the flexHH library, ported onto CPU-based accelerators (Intel Phi KNL) and DFE-based accelerators (Maxeler-based platforms). Results indicate speedups of 11x to 36x over contemporary solutions, a staggering improvement that paves the way for more powerful, model-based brain exploration.


Furthermore, for the neurocomputing applications, the BrainFrame integrated Cloud service for neuroscientific computing has been developed by Neurasmus. BrainFrame draws upon the VINEYARD know-how and is the first service to allow computational (neuro)scientists without deep technical expertise to seamlessly utilize powerful heterogeneous HPC resources from a user-friendly GUI. BrainFrame introduces many novelties in the field. It features a modular and highly expandable system architecture based on containers and Docker Swarm, which enables the seamless scalability (i.e. elasticity) of Cloud-based hardware accelerators towards large-scale brain simulations. It also allows the user to select the optimum hardware-resource configuration based on the application's requirements in terms of cost (power) or performance (execution time).
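Selecting a hardware-resource configuration by cost or by performance can be sketched in a few lines of Python. The `HardwareConfig` type, the `pick_config` function and any numbers passed to them are hypothetical placeholders, not BrainFrame code or measurements; the sketch assumes that each candidate configuration comes with an estimated execution time and power draw.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareConfig:
    """Hypothetical profile of one candidate configuration."""
    name: str
    exec_time_s: float  # estimated execution time (performance criterion)
    power_w: float      # estimated power draw (cost criterion)


def pick_config(configs, objective):
    """Return the configuration that minimizes the chosen criterion."""
    if objective == "performance":
        return min(configs, key=lambda c: c.exec_time_s)
    if objective == "cost":
        return min(configs, key=lambda c: c.power_w)
    raise ValueError(f"unknown objective: {objective!r}")
```

A fuller selector might combine both criteria, for example by minimizing energy (power multiplied by execution time) or by minimizing cost subject to a deadline.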


BrainFrame also offers compatibility with established tools (e.g. NEURON, NEST, PyNN, NeuroML) and uniquely supports real-time feedback from the simulations. Such features significantly shorten the experimentation cycle (theory-experimentation-observations), from days to hours and minutes. BrainFrame has been deployed, for now, on a commercially available heterogeneous Cloud infrastructure (Amazon AWS) and has been integrated with the hardware accelerators developed in VINEYARD.

B) In the case of financial applications, the project assessed the acceleration of risk-valuation calculation formulas, as well as of order-commuting functions (financial protocols). Hardware accelerators have been integrated into the trading system, and the overall performance evaluation showed that the accelerators can provide up to 6.12x speedup compared to contemporary processors. The integration of the hardware accelerators has been performed through the VineTalk interface, which allows the virtualization and sharing of the hardware accelerators.

C) In the domain of database and data-analytics workloads, the VINEYARD accelerators yielded speedups of up to 12x compared to contemporary processors for tasks like sorting, filtering, hashing and encryption. These applications can achieve significant speedup only on platforms where the accelerator is tightly coupled to the processor (e.g. Xeon + Arria10 devices).

All of the frameworks developed in VINEYARD are available at https://github.com/vineyard2020